Database testing and ETL processes

Unit testing with tSQLt is really great: you call FakeTable on any tables your code touches and then insert the columns and rows your test actually needs, nothing more, nothing less. But what happens when you have a more complicated process, like an ETL process?

TL;DR: the same as unit testing, except you probably don’t use tSQLt to mock the tables.

What types of test are there?

Tests get larger and more complicated the further they are from the code. First off we have unit tests, which are very close to the code. A unit test typically knows how one single unit of code works, so setting up data is at its simplest: we just have the rows and columns we need for that test (I know I’ve repeated it, I repeat it to myself over and over and over again).

The next type of test, the type we will talk about here, is integration testing. I stray from the original definition of integration testing (i.e. integrating with other systems): here we move slightly away from the code and, instead of knowing about just one unit of code, we think in terms of just one process. A process could be an SSIS package, a PowerShell script or a “custom something” (I’m trademarking that name btw).

Past integration testing we should be looking to do some performance or functional testing with something resembling production data. This might be a copy from production or some other dataset; wherever it comes from, it should have realistic volumes and patterns of data that match production.

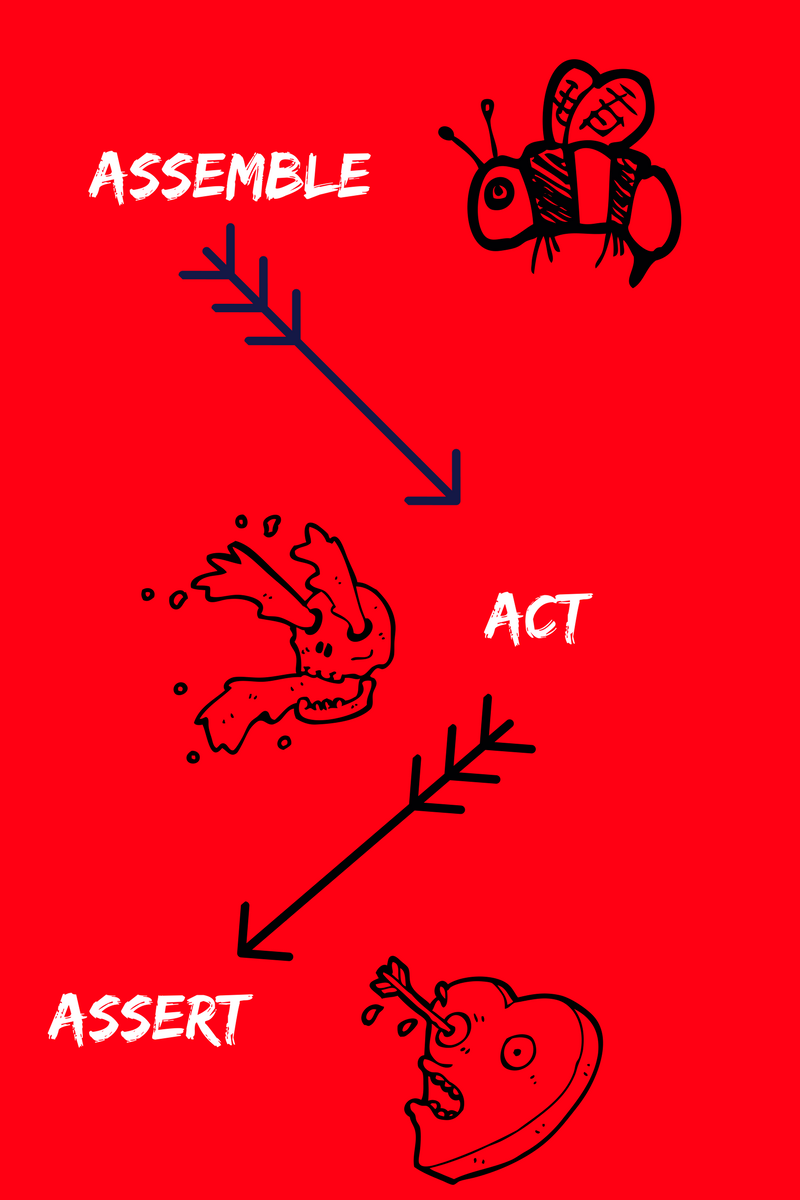

So, what and how do we test in this mid-layer between unit tests and full-scale performance tests? Well, the first thing to say is that, whatever the type of test, it should still follow the assemble, act, assert pattern:

Assemble

In tSQLt, we can call tSQLt.FakeTable and then do an insert. If we don’t use tSQLt, what do we do? Well, we need to set up the data we want, either by using a tool or by writing a load of insert statements. I have seen this done in various ways, such as:

- Writing manual insert scripts

- Using a tool to set up the data

- Making use of APIs in the application to set up the data we need

- Some weird and wonderful things that we shouldn’t recommend

Ultimately, for each test you need to know what data it requires. There isn’t really any way around this, and the sooner you get yourself into the position where you can set up the data you need for a test, the better.

I have worked on projects where the data setup for the first set of tests was so complex that it took over a week before we could set up the data we needed and start writing tests. That seems excessive, but the data requirements were complicated, and the work we were doing was complicated because of decisions outside of our control. By spending the time to get the data right for the tests, we could then write tests and, as a side effect, code more efficiently, which increased our productivity in the long term. If it weren’t for the initial work getting the test data right, we would have ended up taking longer, guaranteed.
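To make the "only the data you need" idea concrete outside of tSQLt, here is a minimal sketch in Python. Everything in it is an assumption for illustration: an in-memory SQLite database stands in for the real source database, and the `customers` table and the `insert_rows` helper are made-up names. The point is that the test names only the columns it cares about and leaves the rest empty.

```python
import sqlite3


def insert_rows(conn, table, rows):
    """Insert dicts as rows, naming only the columns each test cares about.

    Table and column names come from test code, never from user input.
    """
    for row in rows:
        columns = ", ".join(row)
        placeholders = ", ".join("?" for _ in row)
        conn.execute(
            f"INSERT INTO {table} ({columns}) VALUES ({placeholders})",
            tuple(row.values()),
        )
    conn.commit()


# Hypothetical table with more columns than any single test needs.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers "
    "(id INTEGER, name TEXT, email TEXT, country TEXT, created_at TEXT)"
)

# This test only cares about country, so that is all it sets up.
insert_rows(
    conn,
    "customers",
    [{"id": 1, "country": "GB"}, {"id": 2, "country": "FR"}],
)
```

Against a real database the untouched columns may be non-nullable, in which case the helper would need sensible defaults for them, which is part of why getting data setup right can take a while.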

Once you have your data in place for a test, you are now ready to act…

Act

In this phase you need to execute your process. If this is SSIS then I personally find it easier to use dtexec.exe and pass in a whole load of command line arguments to execute a package. I realise that there are a few different deployment scenarios for SSIS with different settings, but if you can get to the point where, from your tests, you can call dtexec, wait for it to finish and then check the result, you will be in a very good place.

The complexity of this phase depends very much on what it is you need to execute. If you are using SSIS then you should be able to use dtexec to run a package. If it is another tool, then you need to find either a command line tool that will execute it, an SDK that can be used from Python, PowerShell, JavaScript etc., or an API you can call. If it is a service you need to call, then you will need to find some way to deploy the changes.
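As a rough sketch of "call it, wait for it to finish, then check the result", the Python below wraps `subprocess.run`. The `run_process` helper, the timeout value and the package path in the comment are assumptions of mine, not anything a particular tool requires. A Python child process stands in for the real command so the sketch runs anywhere.

```python
import subprocess
import sys


def run_process(args, timeout_seconds=600):
    """Run a command, wait for it to finish, and return the completed process."""
    return subprocess.run(
        args,
        capture_output=True,  # keep stdout and stderr for the assert phase
        text=True,
        timeout=timeout_seconds,
        check=False,  # the test decides what counts as a failure
    )


# With SSIS the call might look like this (the package path is hypothetical):
#   result = run_process(["dtexec.exe", "/File", r"C:\packages\LoadUsers.dtsx"])
# Here a Python child process plays the part of the package.
result = run_process([sys.executable, "-c", "print('package finished')"])
```

Passing the arguments as a list rather than one long string lets the library handle the quoting, which removes at least some of the pain of building a command line by hand.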

I worked on one project where we were writing code for the SAS DataFlux tool. Typically, the way it had been used was that someone would copy XML to the server or manually edit a job until they were happy. The developer would then run the package via the DataFlux UI and verify that it worked. Pushing the changes up from dev to test and prod involved manually editing the package in the UI and copying and pasting it into the next environment’s folder.

When I started looking at the tool I saw quite quickly that the XML files were stored in a local folder on the server, so I looked at deploying that way. This worked, but we couldn’t execute the packages. Further investigation found that the tool had an API that the supplied UI used, so a bit of network parsing and a rarely-used API documentation PDF gave us enough information to deploy and then run packages. In about a day or so, we took a tool which had been almost entirely driven manually and turned it into something we could deploy and execute integration tests against.

This act phase can often be quite daunting. When working with SSIS I have written whole C# classes to generate the correct arguments, with the correct number of backslashes, to make dtexec.exe happy. So this phase is often quite hard, but once you have it right it can be re-used for all of your tests at this layer.

Once you can execute your code reliably from a test, you can then move to the final stage, Assert…

Assert

There are a couple of things you will want to do in this phase. The first is to verify that the Act stage completed successfully: if you are running a command line tool like dtexec.exe, was the process exit code a success? A success on Windows is typically a 0. Make sure you capture stdout and stderr and, if you need to, parse them for positive and negative statements. One thing that is always useful is saving the output and results: if you get a non-successful result, output the process exit code and any stdout/stderr messages.

If you are calling an API, did you get an HTTP 200? If not, what did you get? Log the contents of any HTTP requests and responses, including the headers.

Once you know whether the operation succeeded or not, run some scripts against your data and make sure it is right. If in your setup you created the data for a new user with a unique GUID as the name, check whether that user actually exists or not.

That is it really: all you need to do is set up some data, run a process and then verify that the process succeeded and the data is in the state you expect.
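Here is one way the exit code check might look in Python. The `assert_succeeded` helper is a made-up name, and the two child processes are stand-ins for a real tool: one exits with 0, the other writes to stderr and exits with 3. On failure the helper puts the exit code and everything captured into the error message.

```python
import subprocess
import sys


def assert_succeeded(result):
    """Fail with the exit code and all captured output unless the process returned 0."""
    if result.returncode != 0:
        raise AssertionError(
            f"process exited with code {result.returncode}\n"
            f"stdout:\n{result.stdout}\n"
            f"stderr:\n{result.stderr}"
        )


# A stand-in process that succeeds.
ok = subprocess.run(
    [sys.executable, "-c", "print('done')"],
    capture_output=True,
    text=True,
)
assert_succeeded(ok)

# A stand-in process that fails with exit code 3 and a message on stderr.
failing = subprocess.run(
    [sys.executable, "-c", "import sys; sys.stderr.write('bad row'); sys.exit(3)"],
    capture_output=True,
    text=True,
)
try:
    assert_succeeded(failing)
except AssertionError as error:
    failure_message = str(error)
```

Having the output in the failure message means that when a test breaks on a build server you can usually see why without re-running anything.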
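The GUID check could be sketched like this in Python. Again, the names are assumptions: SQLite stands in for the real database, `source_users` and `target_users` are hypothetical tables, and a single INSERT ... SELECT is a placeholder for whatever the real process does.

```python
import sqlite3
import uuid


def user_exists(conn, user_name):
    """Return True if exactly one row for this user is in the target table."""
    row = conn.execute(
        "SELECT COUNT(*) FROM target_users WHERE name = ?", (user_name,)
    ).fetchone()
    return row[0] == 1


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source_users (name TEXT)")
conn.execute("CREATE TABLE target_users (name TEXT)")

# Assemble: a user whose name will not collide with any other test's data.
user_name = str(uuid.uuid4())
conn.execute("INSERT INTO source_users (name) VALUES (?)", (user_name,))

# Act: this single statement is a placeholder for the real ETL process.
conn.execute("INSERT INTO target_users (name) SELECT name FROM source_users")

# Assert: the user arrived, and a user we never created did not.
assert user_exists(conn, user_name)
assert not user_exists(conn, str(uuid.uuid4()))
```

Because the name is unique to this test run, the check still works when other tests have left their own rows in the same table.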

Happy Testing!