spark-dotnet examples - reading and writing csv files

How do you read and write CSV files using the dotnet driver for Apache Spark?

I have a runnable example here:

https://github.com/GoEddie/dotnet-spark-examples

Specifcally:

https://github.com/GoEddie/dotnet-spark-examples/tree/master/examples/split-csv

Let’s take a walkthrough of the demo:

Console.WriteLine("Hello Spark!");

var spark = SparkSession

.Builder()

.GetOrCreate();

We start with the obligatory “Hello World!”, then we create a new SparkSession.

//Read a single CSV file

var source = spark

.Read()

.Option("header", true)

.Option("inferShchema", true)

.Option("ignoreLeadingWhiteSpace", true)

.Option("ignoreTrailingWhiteSpace", true)

.Csv("./source.csv");

Here we read a csv file, the first line of the file contains the column names so we set the option “header” to true. We want to take a guess at the schema so use the option inferSchema. Because the csv has been written with lots of whitespace like “col1, col2” - if we didn’t ignore leading whitespace our second column would be called " col2" which isn’t ideal.

//Write that CSV into many different CSV files, partitioned by city

source.Write()

.Mode(SaveMode.Overwrite)

.Option("header", true)

.PartitionBy("city")

.Csv("./output-partitioned-city-csv");

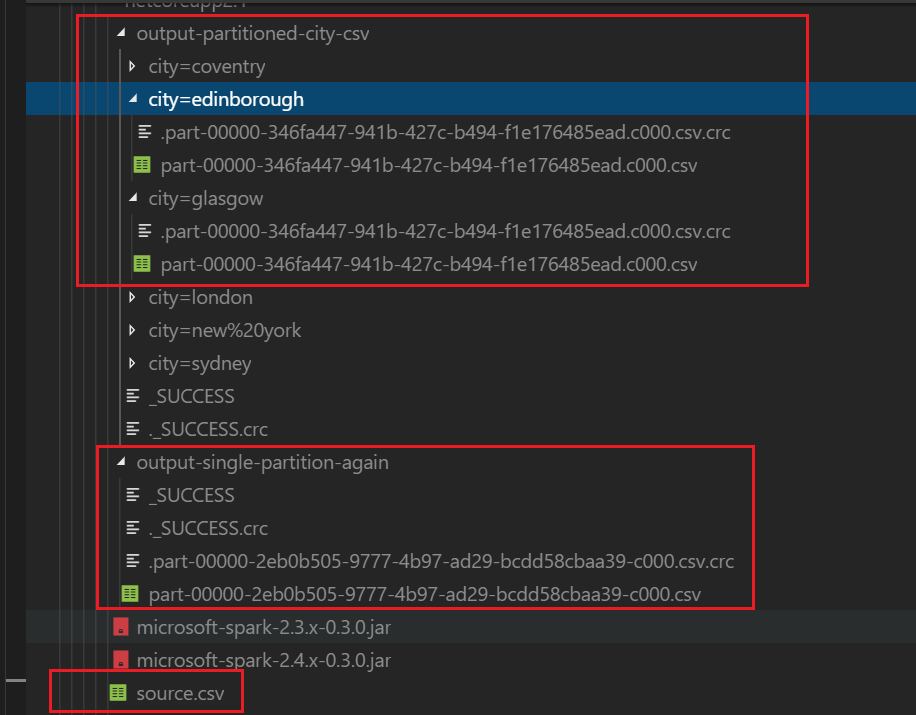

We now take that single csv file we read in and write it back out, but instead of writing to a single file we break the csv’s into multiple csv’s split on “city”, imagine trying to do that in SSIS!

Now we have a set of csv files, we can read them back in as one data set, we can specify the folder they are in rather than each individual csv file:

var partitions = spark

.Read()

.Option("header", true)

.Option("ignoreLeadingWhiteSpace", true)

.Option("ignoreTrailingWhiteSpace", true)

.Schema("name string, age int, city string") //instead of inferSchema we specify the schema

.Csv("./output-partitioned-city-csv");

partitions is now a dataframe that contains all of the csv’s we created earlier, neat hey? Notice also that we can specify the schema really easily using .Schema("name string, age int, city string").

partitions.Write().Mode(SaveMode.Overwrite).Option("header", true).Csv("./output-single-partition-again");

Write the dataframe, of all the csv’s, back into one csv file.

Here we see that a single csv “source.csv” was read, multiple csv’s partitioned by “city” were written and read and then finally a single csv was written back out again: