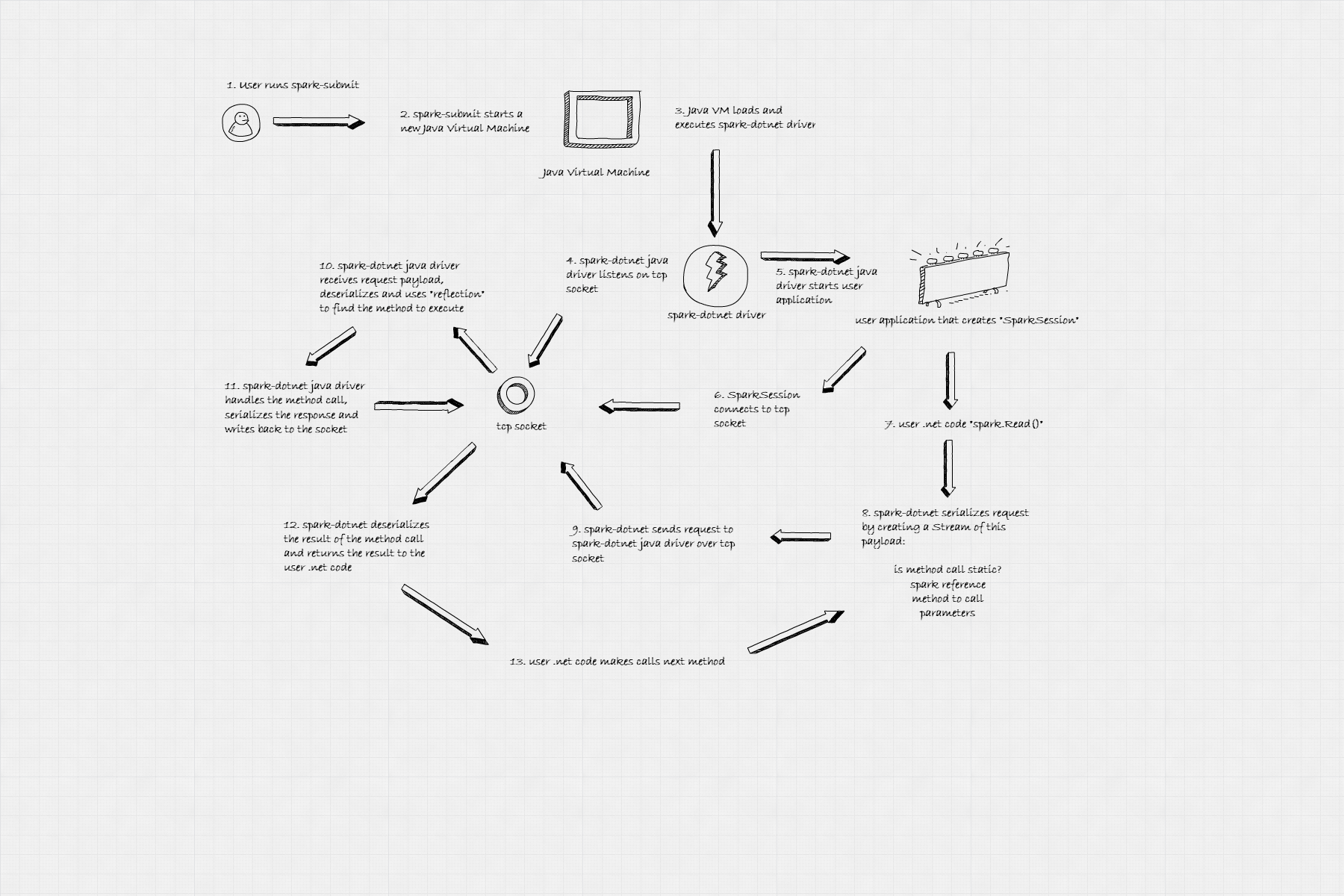

spark-dotnet: how does user .NET code run Spark code in a Java VM?

Apache Spark is written in Scala. Scala compiles to Java bytecode and runs inside a Java virtual machine. The spark-dotnet driver runs dotnet code and calls Spark functionality, so how does that work?

There are two paths to run dotnet code with Spark. The first is the general case, which I will describe here. The second is UDFs, which I will explain in a later post as it is slightly more involved.

The process goes something like this:

1. The user runs spark-submit + 2. spark-submit starts a new Java VM

spark-submit is a script that calls another script, via another script, to get to spark-class2, which creates a load of environment variables and runs “java blah blah”. The blah blah bit is, amongst other things, the name of the spark-dotnet Java driver, i.e. the Java class org.apache.spark.deploy.DotnetRunner.

3. Java virtual machine executes spark-dotnet Java driver

The Java virtual machine takes the name of the spark-dotnet Java driver and starts the driver.

4. Spark-dotnet Java driver listens on TCP port

The spark-dotnet Java driver listens on a TCP socket. This socket is used to communicate between the Java VM and the dotnet code: the dotnet code doesn’t run in the Java VM but in a separate process, communicating with the Java VM via that TCP port. The year is 2019, we serialize and deserialize data all the time and don’t even know it. Hell, Notepad probably even does it.
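To make the "separate process talking over a socket" idea concrete, here is a minimal sketch of the listening side in plain Java. It is not the real spark-dotnet driver code: the class name `BackendSocketSketch`, the choice of the loopback interface and letting the OS pick the port are all my assumptions for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical sketch, not the real spark-dotnet backend.
final class BackendSocketSketch {

    // Listen on the loopback interface. Port 0 asks the OS for any free port
    // (an assumption here; the real driver may choose its port differently).
    static ServerSocket listen() {
        try {
            return new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Opens a client connection (standing in for the dotnet process) and
    // accepts it on the server side. Returns true when both ends are connected.
    static boolean connectOnce() {
        try (ServerSocket server = listen();
             Socket client = new Socket(InetAddress.getLoopbackAddress(), server.getLocalPort());
             Socket accepted = server.accept()) {
            return client.isConnected() && accepted.isConnected();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In this sketch the client lives in the same process purely to keep the example short; in the real thing the client end belongs to the dotnet process.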

5. Spark-dotnet Java driver starts user application

The spark-dotnet Java driver launches the user application. There is one exception: we can start the driver in a debug mode, in which the driver sits there waiting for an application to start up. In normal operation, though, the Java driver executes our dotnet user code.

6. SparkSession connects to TCP socket

When the user application wants to use spark, it calls:

    var spark = SparkSession
        .Builder()
        .GetOrCreate();

This code connects to the socket that the Java driver is listening on, and we have a communication channel between our code and the Java driver (yay!).

7. User .NET code calls “spark.Read()”

The user code decides it needs to do something, so it calls a method such as “Read”. Each method call becomes a request that will be carried out inside the Java VM, along with any parameters. In this case there aren’t any parameters, but some methods do have them.

8. Spark-dotnet serializes the request

The user dotnet code calls the Read() method, which is in the Microsoft.Spark dotnet library. This creates a payload that can be serialized: the payload contains a reference to the object the method was called on, the name of the method and any parameters. In this case, the payload would look like:

“Spark” object reference + “read” (note the lowercase, as is the Scala naming standard). There are no parameters; otherwise, they would be part of the payload.
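As a rough illustration of what "a payload that can be serialized" might look like, here is a sketch that packs an object reference, a method name and the parameters into bytes and unpacks them again. The field order, the use of `writeUTF` and the names `PayloadSketch`, `encode` and `decode` are assumptions of mine; the real spark-dotnet wire format is not described in this post and may well differ.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical wire format, for illustration only.
final class PayloadSketch {

    // Layout: object reference, method name, parameter count, then each parameter.
    static byte[] encode(String objectId, String methodName, List<String> args) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeUTF(objectId);
            out.writeUTF(methodName);
            out.writeInt(args.size());
            for (String arg : args) {
                out.writeUTF(arg);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Reads the fields back in the same order: [objectId, methodName, args...].
    static List<String> decode(byte[] payload) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(payload))) {
            List<String> fields = new ArrayList<>();
            fields.add(in.readUTF());
            fields.add(in.readUTF());
            int count = in.readInt();
            for (int i = 0; i < count; i++) {
                fields.add(in.readUTF());
            }
            return fields;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With this layout, `encode("spark-session-1", "read", List.of())` carries just the reference and the method name, while a later call such as `option` would append its parameters after the count. The id `spark-session-1` is made up for the example.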

9. Spark-dotnet sends the request to TCP socket

The dotnet library sends the payload down the TCP socket to the spark-dotnet Java driver.

10. Spark-dotnet Java driver receives payload, deserializes and finds the method to call

The Java driver (written in Scala!) receives the payload, deserializes it and then finds the method to call. Which method that is depends on the type of the object the call was made on (or, for a static method, the class it belongs to), the method name and any parameters.
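Finding the method from a name and some parameters is the sort of thing Java reflection is good at. The sketch below keeps a table of object references and looks up a public method by name and parameter count. It is my own simplification, not the driver's code: `DispatchSketch`, `register` and `call` are hypothetical names, static methods are left out, and a real implementation would also have to compare parameter types to pick between overloads.

```java
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;

// Hypothetical dispatcher, for illustration only.
final class DispatchSketch {

    // Maps the object references sent over the wire to live JVM objects.
    private final Map<String, Object> objects = new HashMap<>();

    void register(String objectId, Object target) {
        objects.put(objectId, target);
    }

    // Finds a public method by name and parameter count, then invokes it.
    // Simplification: parameter types are not checked before invoking.
    Object call(String objectId, String methodName, Object... args) {
        Object target = objects.get(objectId);
        if (target == null) {
            throw new IllegalArgumentException("unknown object reference: " + objectId);
        }
        for (Method method : target.getClass().getMethods()) {
            if (method.getName().equals(methodName) && method.getParameterCount() == args.length) {
                try {
                    return method.invoke(target, args);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("call failed: " + methodName, e);
                }
            }
        }
        throw new IllegalArgumentException(
                "no method " + methodName + " taking " + args.length + " parameters");
    }
}
```

For example, after `register("s1", "spark")`, the call `call("s1", "length")` returns 5 and `call("s1", "concat", "-dotnet")` returns "spark-dotnet". A plain `String` stands in for a Spark object here so the example has no dependencies.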

11. Spark-dotnet Java driver handles the method call

The spark-dotnet Java driver calls the method with any parameters and waits for it to complete. When it completes, it writes the response back down the socket.

12. Spark-dotnet deserializes the response

The dotnet Spark library then receives the response, deserializes it and returns the result to the user dotnet code.
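Putting steps 8 to 12 together, a whole request and response over a loopback socket can be sketched in a few lines. Everything here is an assumption made to keep the example small: both ends run in one Java process, the "object reference" is simply a string value, only parameterless methods are supported, and the response is sent back as text. The names `RoundTripSketch` and `callOverLoopback` are hypothetical.

```java
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical end-to-end sketch, not the real spark-dotnet protocol.
final class RoundTripSketch {

    static String callOverLoopback(String target, String methodName) {
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
             Socket client = new Socket(InetAddress.getLoopbackAddress(), server.getLocalPort());
             Socket backend = server.accept()) {

            // "dotnet" side: serialize the request and send it down the socket.
            DataOutputStream request = new DataOutputStream(client.getOutputStream());
            request.writeUTF(target);
            request.writeUTF(methodName);
            request.flush();

            // "Java driver" side: deserialize, find the method, call it.
            DataInputStream received = new DataInputStream(backend.getInputStream());
            String value = received.readUTF();
            String name = received.readUTF();
            Object result = value.getClass().getMethod(name).invoke(value);

            // "Java driver" side: write the response back down the socket.
            DataOutputStream response = new DataOutputStream(backend.getOutputStream());
            response.writeUTF(String.valueOf(result));
            response.flush();

            // "dotnet" side: deserialize the response and hand it to the caller.
            return new DataInputStream(client.getInputStream()).readUTF();
        } catch (IOException | ReflectiveOperationException e) {
            throw new IllegalStateException("round trip failed", e);
        }
    }
}
```

Calling `RoundTripSketch.callOverLoopback("spark", "length")` returns the string "5". The single-threaded layout only works because the messages are tiny and fit in the socket buffers; two real processes would each block on their own end.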

13. User dotnet code makes next call

The user code then makes the next call, probably .Option in this case, and the process from step 8 repeats ad infinitum.

That’s it. Hopefully this has explained how the process works. Remember, this isn’t for UDF calls; that is some more fun waiting for another day.