Delta Lake from .NET in a Spark Connect gRPC world

UPDATE: I have now implemented Delta support in spark-connect-dotnet.

What to do?

At some point we will want to do something with Delta Lake, so I wanted to explore the options. Before we do that, there is a little explaining to do about Delta Lake and Spark. There are two completely separate sides to this: the first is getting Spark to read and write in Delta format, and the second is performing operations on the actual files directly without using Spark, operations like VACUUM.

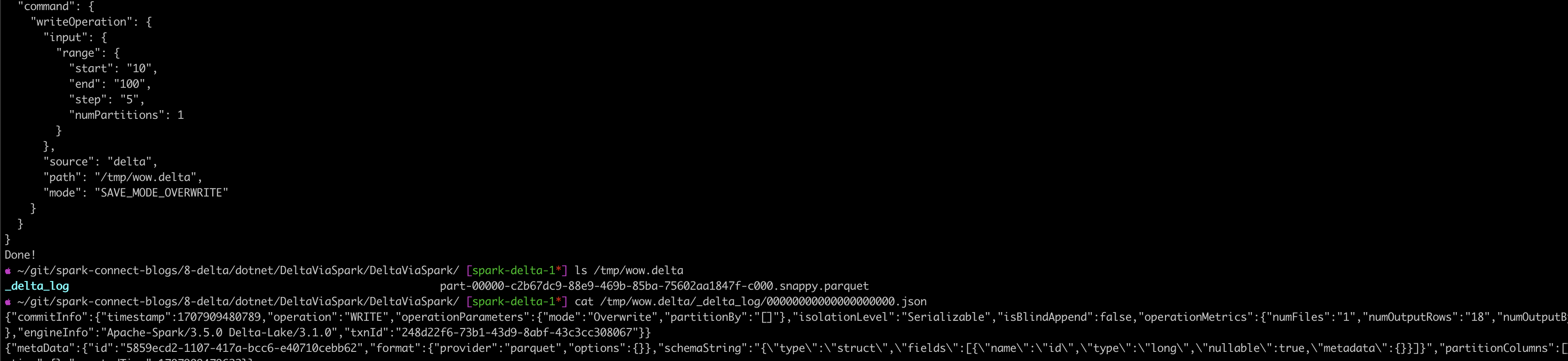
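Working on the files directly means dealing with Delta's transaction log: a table directory holds Parquet data files plus a `_delta_log` folder of commit files named with a 20-digit, zero-padded version (`00000000000000000000.json`, ...), where each line is one JSON action such as `add` or `remove`. As a rough sketch of what that side involves (not how spark-connect-dotnet does it), the C# below replays those commits to find the data files in the latest version. It assumes there are no checkpoint files and ignores every action except `add` and `remove`; `ActiveDeltaFiles` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text.Json;

// Hypothetical sketch: replay <table>/_delta_log/*.json to get the latest version
// and its active data files. Assumes no checkpoints; real readers start from the
// last checkpoint and handle many more action types.
static (long Version, IReadOnlyCollection<string> Files) ActiveDeltaFiles(string tablePath)
{
    var logDir = Path.Combine(tablePath, "_delta_log");

    // Commit names are 20 zero-padded digits + ".json", so ordinal order == version order.
    var commits = Directory.GetFiles(logDir, "*.json")
        .Select(f => Path.GetFileName(f))
        .Where(n => n.Length == 25 && n.Take(20).All(c => char.IsDigit(c)))
        .OrderBy(n => n, StringComparer.Ordinal)
        .ToList();

    var active = new HashSet<string>();
    long version = -1;

    foreach (var name in commits)
    {
        // Each non-empty line is one action object, e.g. {"add":{"path":"part-0.parquet",...}}
        foreach (var line in File.ReadLines(Path.Combine(logDir, name)))
        {
            if (string.IsNullOrWhiteSpace(line)) continue;
            using var doc = JsonDocument.Parse(line);
            var root = doc.RootElement;
            if (root.TryGetProperty("add", out var add))
                active.Add(add.GetProperty("path").GetString()!);
            else if (root.TryGetProperty("remove", out var remove))
                active.Remove(remove.GetProperty("path").GetString()!);
        }
        version = long.Parse(name.Substring(0, 20));
    }

    return (version, active);
}
```

Calling `ActiveDeltaFiles("/tmp/wow.delta")` against a freshly written table would return version 0 and the Parquet files from that first commit. Even this toy version shows why writing a full .NET implementation of operations like VACUUM is a substantial job.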

The first side of this is simple and supported out of the box: when we read or write, we create a plan with the delta format specified:

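```csharp
// Part of a Spark Connect Command; rangeRelation is a Relation built earlier
var command = new Command
{
    WriteOperation = new WriteOperation
    {
        Mode = WriteOperation.Types.SaveMode.Overwrite,
        Source = "delta",          // tells Spark to write in Delta format
        Path = "/tmp/wow.delta",
        Input = rangeRelation
    }
};
```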

If we start our Spark Connect server with the Delta package and these config options:

```shell
$SPARK_HOME/sbin/start-connect-server.sh \
  --packages org.apache.spark:spark-connect_2.12:3.5.0,io.delta:delta-spark_2.12:3.1.0 \
  --conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension" \
  --conf "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog"
```

Then we can read and write Delta tables.

Then there is the other side of the story: how to use the Delta Lake API directly. For this there are a few choices:

- Write a .NET library that performs the Delta Lake operations.

- Use gRPC to call the equivalent SQL commands.

- Use interop to call the Rust library (delta-rs).

- Use a Scala class to proxy requests.

- Anything else?
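For the SQL-over-gRPC option: with `DeltaSparkSessionExtension` enabled as in the server command above, Delta exposes operations such as `VACUUM`, `DESCRIBE HISTORY` and `OPTIMIZE` as SQL, and a path-based table can be addressed as ``delta.`/path` ``. A minimal sketch of building those statements in C# follows; the helper names are hypothetical, and actually sending the string to the server (for example as the `Sql` field of a Spark Connect `SqlCommand`) is left out.

```csharp
using System;

// Hypothetical helpers: build Delta Lake SQL for a path-based table.
// Backticks inside the path are escaped by doubling them, as Spark SQL expects
// inside a backquoted identifier.
static string DeltaTableRef(string path) => "delta.`" + path.Replace("`", "``") + "`";

// VACUUM removes files no longer referenced by the table; RETAIN is optional.
static string VacuumSql(string path, int? retainHours = null) =>
    retainHours is int hours
        ? $"VACUUM {DeltaTableRef(path)} RETAIN {hours} HOURS"
        : $"VACUUM {DeltaTableRef(path)}";

static string HistorySql(string path) => $"DESCRIBE HISTORY {DeltaTableRef(path)}";

static string OptimizeSql(string path) => $"OPTIMIZE {DeltaTableRef(path)}";
```

The appeal of this route is that Spark does the real work, so the .NET side only needs to produce strings; the trade-off is that only operations Delta exposes through SQL are reachable this way.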