Spark Delta Lake, Updates, Deletes and Time Travel

When you use delta lake there are a couple of interesting things to note based around the fact that the data is stored in parquet files which are read-only and delta lake includes the ability to delete and update data and view the state of a table at a specific point in time. Obviously read-only and updates and deletes don’t exactly sound like they work together, so how does it all work and what do we need to be aware of?

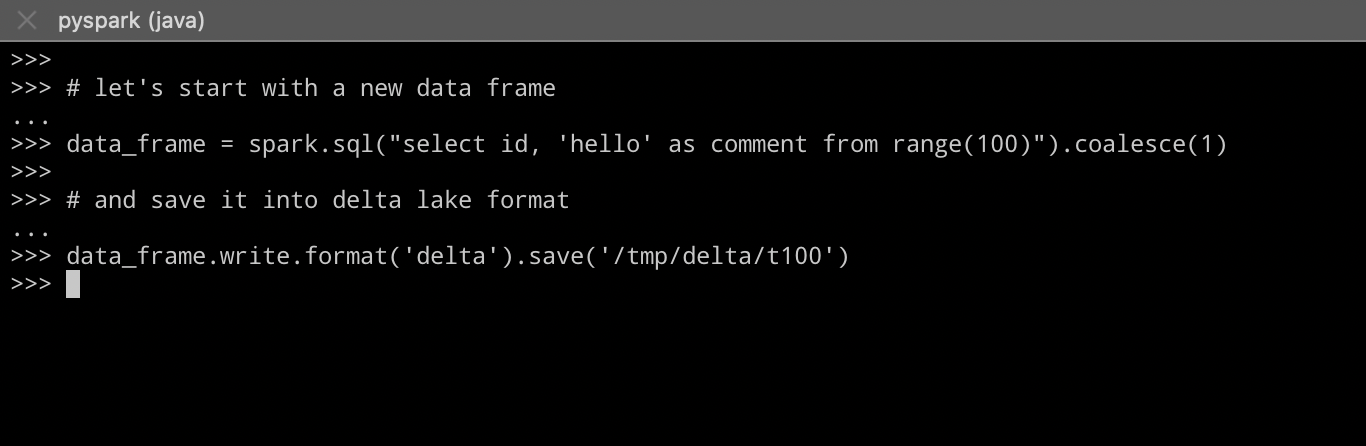

Let’s start by creating a new data frame and save it in delta format:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-1.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-1.png)

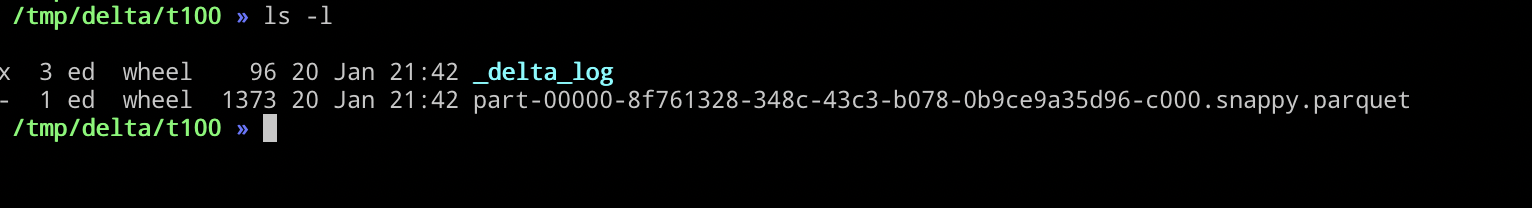

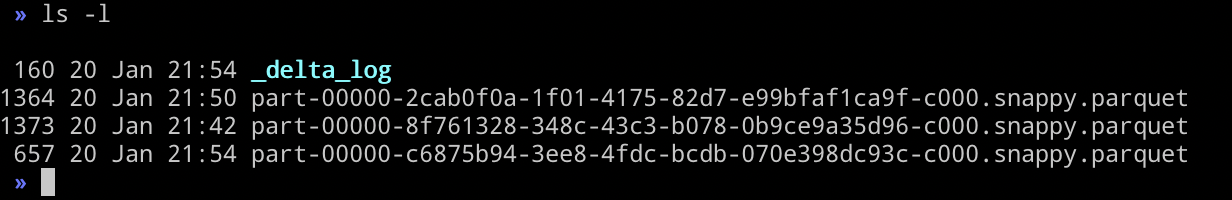

If we look at the file system we can see we have a single parquet file:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-2.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-2.png)

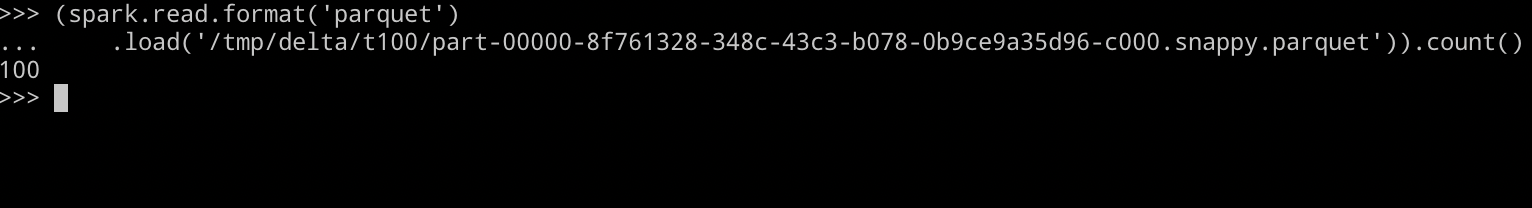

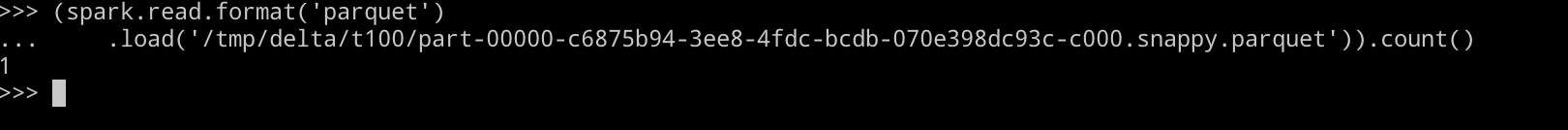

If we load that one parquet file we can see that it has one hunded rows:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-3.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-3.png)

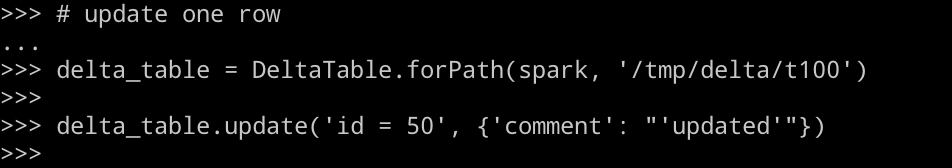

Now lets update one row:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-4.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-4.png)

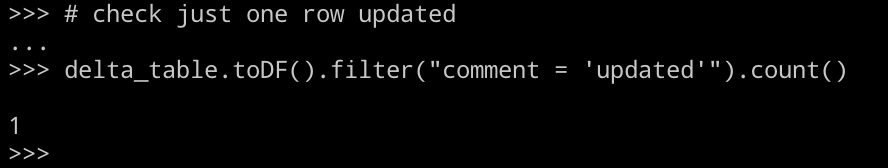

and check that just one row was updated:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-5.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-5.png)

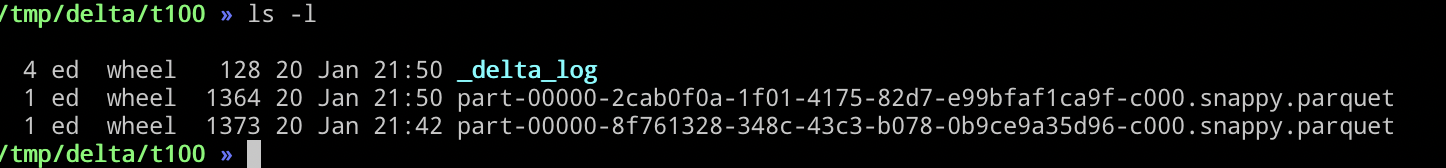

If we look in the file system we can see two files, notice that they are about the same size - this is because the second file contains the updated row as well as the original 99 rows

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-6.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-6.png)

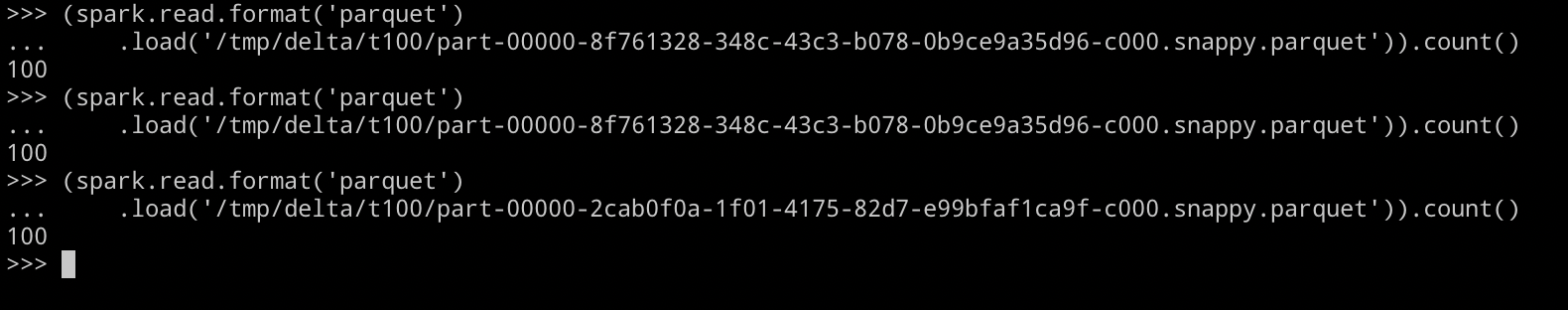

If we re-read the first file and check it and then read the second we can see that they both have one hundred rows:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-7.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-7.png)

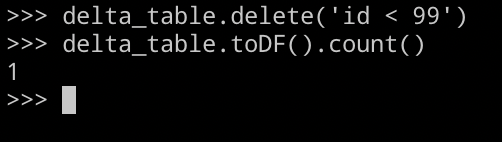

Now, what about deletes? If we delete every record apart from one:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-8.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-8.png)

We then get a new parquet file that is smaller:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-9.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-9.png)

If we load the new parquet file, we can see that it only has one row:

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-10.png)

(https://the.agilesql.club/assets/images/spark-updates/spark-updates-10.png)

So, what to remember?

The way that delta lake works is that if you update a row then that new updated row and all the existing rows are written, not just the updated rows. If a row is deleted, every row apart from the deleted rows are written out to the new parquet file. There is some additional work that goes on in the log file (json file) to say that the existing parquet files are to be ignored and to use the new one instead.