Goal of this post

This post has two goals. The first is to take a look at Apache Arrow and how we can do things like show the output from DataFrame.Show. The second is to start creating objects that look more familiar to us, i.e. the DataFrame API.

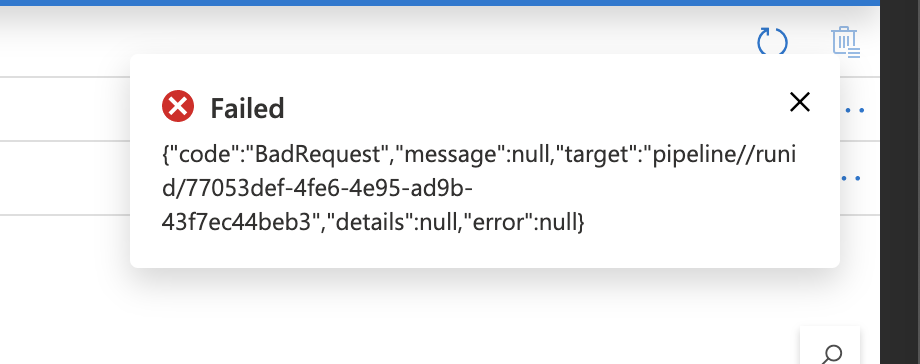

I want to take it in small steps. I 100% know that this sort of syntax is possible: